TL;DR: Year-end metrics often tell the most comforting version of the story, not the most accurate one. Completion rates, training counts, and green dashboards reflect activity, not readiness. Real change success shows up later, in confidence, coherence, and capacity. The most valuable year-end insights come from listening for uncertainty, friction, and workarounds, not from celebrating closure. The practitioners who add the most value are the ones who help leaders measure what will determine whether change actually sticks.

The end of the year is metric season.

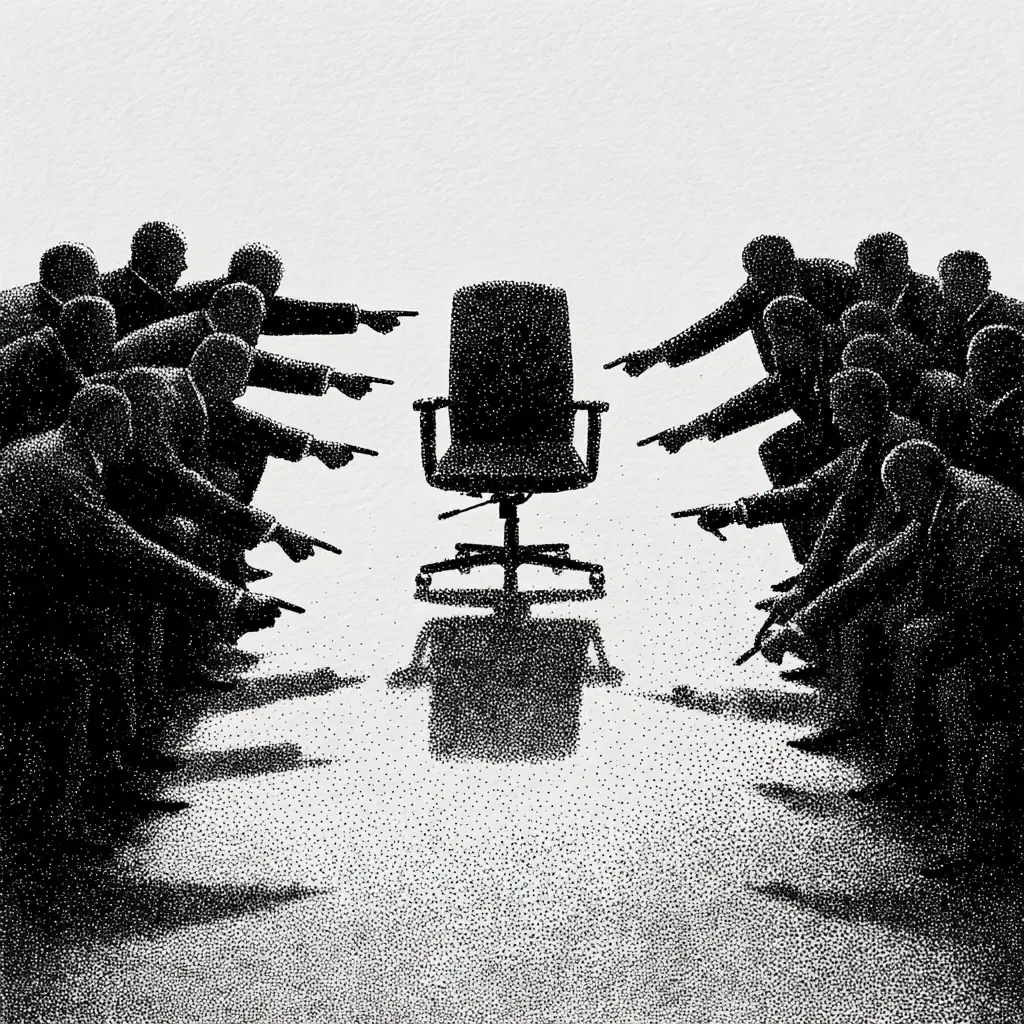

Dashboards are refreshed. Statuses turn green. Reports emphasize completion, coverage, and compliance. Leaders look for reassurance that the year’s investments delivered what they promised.

The problem is not measurement itself. The problem is timing.

Many of the metrics we rely on at year-end tell comforting stories at precisely the moment when honesty would be more useful.

Why Year-End Numbers Are So Misleading

Year-end metrics are shaped by incentives as much as reality.

Teams want to close strong. Programs are under pressure to demonstrate progress. Nobody benefits from surfacing ambiguity when budgets, reputations, and roadmaps are on the line.

As a result, indicators tend to favor what is easiest to show:

- Deliverables completed

- Communications sent

- Training sessions delivered

- Milestones technically achieved

These measures are not false. They are simply incomplete. They describe activity, not assimilation.

Change, however, does not resolve on a fiscal calendar.

The Completion Trap

One of the most persistent illusions in change work is that completion equals success.

A system can be live without being stable. A workforce can be trained without being confident. A process can be adopted on paper while quietly bypassed in practice.

Year-end metrics often reward the appearance of finish lines, even when the work of integration is just beginning. This creates a dangerous gap between reported progress and lived experience.

Practitioners feel this gap acutely. It shows up in support tickets, workarounds, and whispered frustration long after the metrics declare victory.

What Traditional Metrics Miss

Most year-end change measures fail to capture three critical dimensions:

Confidence.

Do people believe they can operate effectively in the new environment without constant support?

Coherence.

Do changes make sense together, or do they feel like disconnected demands competing for attention?

Capacity.

Does the organization have the bandwidth to sustain the change, or is it borrowing energy from the future?

These factors are harder to quantify, which is precisely why they are often ignored. Yet they tend to be far better predictors of long-term outcomes than attendance rates or artifact counts.

Measuring Readiness Instead of Readout

If year-end metrics are meant to inform the next phase of work, then they should focus less on what happened and more on what is likely to happen next.

Readiness-based measures shift the conversation from performance reporting to risk awareness.

Examples include:

- Where do teams still rely on informal workarounds?

- Which roles express uncertainty rather than resistance?

- What decisions are being deferred because the new model feels unclear?

These questions surface friction early, while it is still manageable.

Listening as Measurement

Some of the most valuable data at year-end is qualitative.

Patterns in manager conversations. Themes in support requests. The language people use when describing the change without leadership present.

This is not anecdotal noise. It is signal.

Organizations that treat listening as a form of measurement develop a far more accurate picture of where they stand. They also build credibility by demonstrating that metrics are not just about validation, but about learning.

Redefining What “Good” Looks Like

Year-end reporting often asks whether the change was successful. A more useful question is whether the organization is positioned to succeed next.

That reframing changes what counts as good news.

Acknowledging uncertainty becomes a strength. Naming unfinished work becomes responsible. Highlighting recovery needs becomes strategic rather than inconvenient.

Practitioners who can help leaders see this shift provide enormous value, even when the story is less tidy than the dashboard suggests.

Carrying Better Measures Forward

The goal is not to abandon metrics, but to evolve them.

Completion metrics still matter. They simply should not be mistaken for outcomes. Pairing them with indicators of confidence, coherence, and capacity creates a more truthful picture.

This is especially important at year-end, when decisions made on partial data shape priorities for months to come.

Final Thought

Year-end metrics are not wrong, but they are rarely sufficient. The most effective change practitioners know how to read past the numbers and help organizations measure what actually determines whether change will hold.

ChangeGuild: Power to the Practitioner™

Now What?

- Separate completion from readiness in your reporting

When reviewing year-end metrics, explicitly label which indicators show activity and which indicate readiness. Make the distinction visible so leaders do not confuse finished work with sustainable change.

- Add one readiness question to every status review

Ask a simple forward-looking question: What still feels fragile right now? Track the answers over time. Patterns matter more than precision.

- Mine support data for signal, not volume

Look beyond ticket counts and training attendance. Analyze themes in repeat questions, workarounds, and escalations. These reveal where confidence and coherence are breaking down.

- Use manager conversations as an early warning system

Equip people leaders with a short prompt set to surface uncertainty, not just compliance. What managers hear in side conversations is often the most accurate indicator of post–year-end risk.

- Reframe unfinished work as a planning asset

Treat what did not stabilize by year-end as input to next-year priorities, not failure. Naming recovery needs early protects capacity and prevents quiet erosion later.

Frequently Asked Questions

Why are year-end metrics so misleading?

Because they are optimized for closure, not truth. Year-end metrics often reflect what was easiest to measure or most defensible to report, not what actually determines whether change will hold once attention moves on.

Does this mean traditional KPIs are useless?

No. Completion metrics, training counts, and delivery milestones still matter. They just cannot stand alone. Without measures of confidence, coherence, and capacity, they give an incomplete and sometimes misleading picture.

What should leaders look at instead of completion rates?

Leaders should look for signals of readiness: where people still hesitate, where workarounds persist, where decisions stall, and where support demand remains high. These indicators are better predictors of future performance than check-the-box metrics.

How can organizations measure “confidence” or “coherence” without surveys?

Listen to how people talk about the change. Review patterns in support tickets, manager feedback, escalation themes, and informal conversations. Qualitative data, when reviewed consistently, is often more honest than formal surveys.

Isn’t focusing on uncertainty risky at year-end?

Only if the goal is reassurance instead of resilience. Surfacing uncertainty early allows organizations to adjust priorities, protect capacity, and prevent burnout or failure later. Avoiding it simply delays the cost.

How can practitioners use this without sounding negative?

By framing gaps as forward-looking insight, not criticism. The goal is not to undermine progress, but to make next-year decisions smarter by acknowledging what still needs support.

What’s the biggest mistake teams make with year-end reporting?

Confusing activity with adoption. When teams assume that delivery equals durability, they miss the chance to stabilize change before it becomes fragile or costly to fix.
