TL;DR: AI transformations often fail first in shared services not because the technology is flawed, but because these teams absorb complexity without authority. Automation removes the human buffer that quietly keeps systems functioning, causing exceptions to spike, trust to erode, and workarounds to multiply. Organizations that succeed redesign decision rights and exception handling before automating. Those that do not discover too late that AI didn’t break their operations — it revealed how fragile they already were.

When AI enters an organization, shared services feel it first.

Finance. HR. IT. Procurement. Customer operations.

These teams sit at the crossroads of process, policy, and scale. They are centralized. Measured. Instrumented. They touch everything. Which is precisely why organizations keep starting their AI journeys there.

On paper, it makes perfect sense.

In practice, it is where AI transformations most often begin to fracture.

Not because shared services are weak.

But because they are exposed.

The Hidden Role Shared Services Play

Shared services exist to standardize work across complexity.

They translate policy into action.

They absorb variation across business units.

They resolve edge cases that never make it into documentation.

They are not just operational engines. They are interpretive layers.

And that distinction matters.

AI systems are excellent at pattern recognition.

Shared services live in the space between patterns.

That tension is manageable at low volume. At enterprise scale, it becomes structural.

Why AI Gets Deployed Here First

Organizations usually start their AI efforts in shared services for the same reasons:

- The data is relatively clean

- The workflows are documented

- The ROI is easier to model

- The work appears repeatable

- The cost pressure is constant

From a transformation perspective, shared services look “ready.”

What that framing misses is how much of the work depends on judgment, escalation, and informal coordination that exists outside the process map.

The very things that make shared services function well are usually invisible to the systems being introduced.

What Automation Actually Removes

In mature shared services environments, people compensate for design gaps every day.

They:

- Notice when inputs technically comply but violate intent

- Catch inconsistencies between systems

- Smooth handoffs before they escalate

- Resolve conflicts that policy never anticipated

This work is rarely documented. It is rarely measured. It is almost never credited.

AI does not replace this work by default.

It removes the buffer that made the work survivable.

When automation increases throughput without redesigning decision logic, the system doesn’t become more efficient. It becomes more brittle.

Errors propagate faster.

Exceptions multiply.

Escalations rise.

And the people left in the loop now spend their time cleaning up after the system instead of preventing failures upstream.

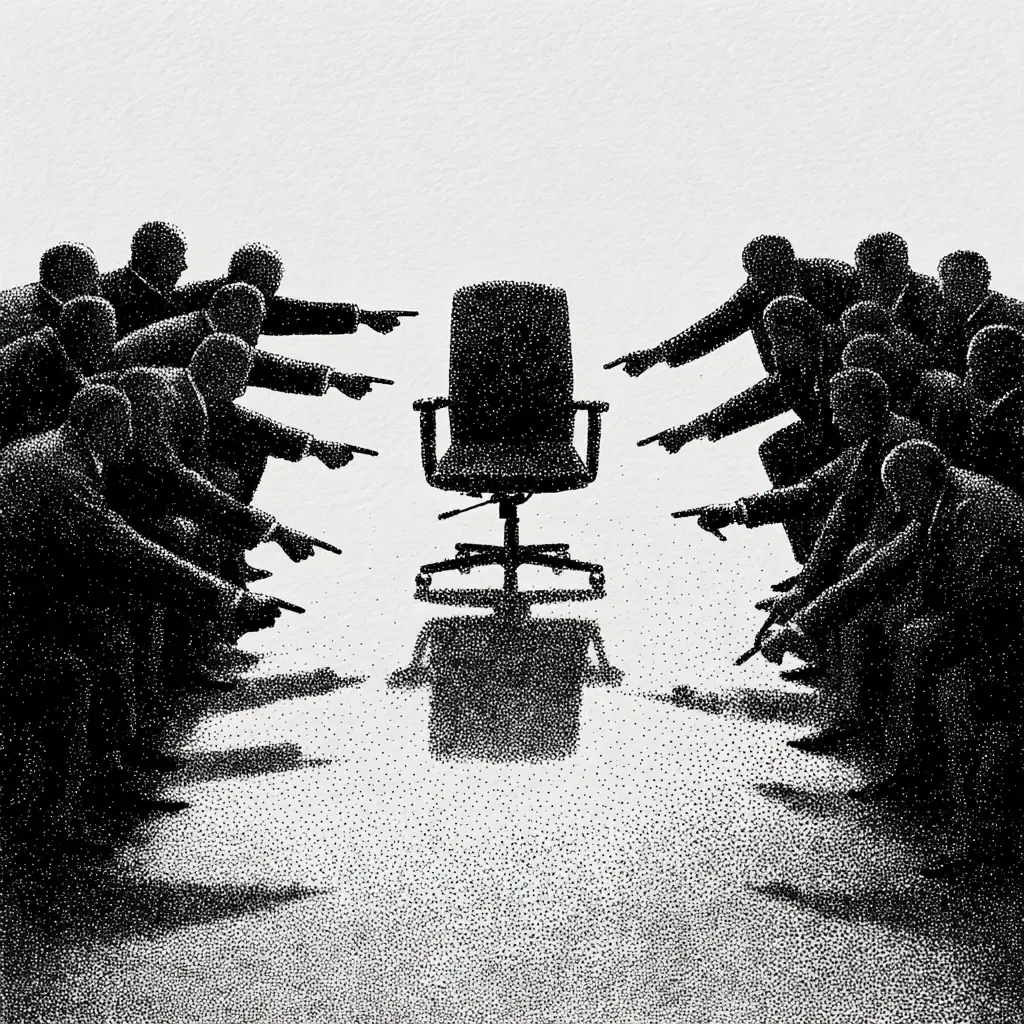

The Blame Always Lands in the Same Place

When things go wrong, shared services take the hit.

Finance becomes “too rigid.”

HR becomes “impersonal.”

IT becomes “the blocker.”

Procurement becomes “slow.”

What rarely gets acknowledged is that these teams are enforcing decisions embedded upstream — in tools, policies, and assumptions they did not create and cannot easily override.

They are accountable without authority.

That asymmetry is not a people problem.

It is a design problem.

The Exception Trap

AI systems in shared services are optimized for the norm.

But organizations do not run on norms. They run on edge cases.

As exceptions accumulate, three things happen:

- Human intervention increases rather than decreases

- Work becomes harder to explain and harder to teach

- Experienced staff burn out while new hires struggle to ramp

On dashboards, performance looks stable.

On the ground, complexity explodes.

The organization believes it is becoming more efficient.

The people inside it know it is becoming more fragile.

Why This Spreads Beyond Shared Services

Once trust erodes inside shared services, the effects ripple outward.

Business units start working around the system.

Leaders escalate issues directly to individuals.

Shadow processes multiply.

Standardization quietly collapses.

The very thing shared services were designed to create — consistency — disappears.

And AI, which was supposed to streamline operations, ends up accelerating fragmentation.

What Organizations Get Wrong

Most responses focus on improving the technology.

More training.

Better data.

Additional rules.

Tighter controls.

These interventions miss the point.

The problem is not that shared services are bad candidates for AI.

The problem is that they are being asked to absorb complexity without a corresponding redesign of:

- Decision rights

- Escalation authority

- Exception ownership

- Human judgment boundaries

Without those changes, AI simply amplifies the mismatch between how work is imagined and how it actually happens.

What Resilient Organizations Do Differently

Organizations that avoid this trap make different choices.

They redesign processes before automating them.

They define which decisions AI can make and which must remain human.

They treat exception handling as a core capability, not a failure mode.

They give shared services real authority, not just responsibility.

Most importantly, they involve shared services early — not as implementers, but as co-designers of the operating model.

The Practitioner’s Role

This is where change practitioners matter most.

Not as trainers.

Not as communicators.

But as translators of invisible work.

Practitioners are often the only ones who can:

- Surface the hidden labor AI displaces

- Show where efficiency gains are being paid for elsewhere

- Connect exception volume to structural design flaws

- Make the cost of “invisible work” visible to leadership

Without that intervention, shared services quietly absorb the damage until something breaks.

And when it does, the failure is blamed on execution instead of design.

Final Thought

Shared services do not fail first in AI transformations because they are weak.

They fail first because they sit at the intersection of scale, policy, and human consequence.

They are where organizational assumptions collide with reality.

Organizations that strengthen shared services before automating them build resilience into the system.

Organizations that do not will discover — usually too late — that AI did not break their operations.

It simply revealed how fragile they already were.

ChangeGuild: Power to the Practitioner™

Now What?

1. Map where human judgment actually happens

Do not rely on process maps alone. Identify where people override systems, interpret policy, or resolve gray areas. That is where AI will create pressure first.

2. Separate automation from authority

If a team is responsible for outcomes, they must have the authority to override or pause AI-driven decisions. Accountability without control is a structural failure.

3. Treat exceptions as signal, not noise

Rising exception volume is not a training problem. It is feedback that the system design does not match reality.

4. Redesign before you automate

If a process relies on informal coordination to work, automation will amplify its weaknesses. Fix the design first, then scale it.

5. Use change practitioners as system translators

Practitioners are uniquely positioned to see where efficiency gains are being paid for elsewhere. Use them to surface invisible work before it becomes institutional debt.

Frequently Asked Questions

Why do shared services struggle first during AI adoption?

Because they operate at the intersection of scale, policy, and exception handling. AI removes the human buffer that quietly resolves ambiguity, exposing weaknesses in process design.

Is AI the problem in these cases?

No. The issue is not the technology itself but the assumption that automation can replace judgment without redesigning authority and escalation structures.

Why do exception volumes increase after automation?

AI systems optimize for norms. Real organizations operate on edge cases. When exceptions are not designed for, they multiply rather than disappear.

What role should change practitioners play in AI transformations?

They should surface invisible work, translate exception patterns into design insights, and help leaders understand where automation is shifting risk rather than eliminating it.

How can organizations prevent shared services from becoming bottlenecks?

By involving them early in AI design, granting real decision authority, and treating exception handling as a core capability instead of a failure mode.

This post is free, and if it supported your work, feel free to support mine. Every bit helps keep the ideas flowing and the practitioners powered.