TL;DR: AI is quietly pushing HR out of its strategic role and into the position of risk governor. When HR is brought in late to approve or defend AI-driven people decisions it did not design, it absorbs blame without having real influence. To stay credible, HR must reclaim a seat at the design table and shape how AI is used before governance and damage control become its default job.

For years, HR worked to reposition itself as a strategic partner.

Closer to the business. Aligned with talent strategy. Focused on capability, engagement, and leadership rather than just policy and compliance.

AI is quietly pulling HR in the opposite direction.

As algorithmic decision-making spreads across hiring, performance, scheduling, learning, and workforce planning, HR is increasingly being asked to play a different role.

Not architect of experience.

Not steward of culture.

But governor of risk.

How HR Became the Default Owner of AI Risk

When AI enters people-related decisions, uncertainty follows.

Bias concerns. Regulatory exposure. Legal defensibility. Ethical questions about surveillance, fairness, and transparency.

These issues do not fit neatly into IT, legal, or operations alone. They land, almost by default, in HR.

HR owns the policies. HR owns employee relations. HR owns the fallout when systems produce outcomes that feel unjust or harmful.

Over time, HR becomes the place where AI risk is contained, even when it was never empowered to shape the underlying design.

The Shift from Enablement to Enforcement

This creates a subtle but significant role shift.

Instead of asking how AI can enhance talent and experience, HR is asked to answer different questions:

Is this compliant?

Is this defensible?

Is this allowed?

These are necessary questions. They are also constraining.

When HR’s primary contribution becomes gatekeeping, its strategic influence narrows. The function is seen as slowing things down rather than shaping better outcomes.

This is not because HR lacks vision, but because it is being positioned late in the decision cycle.

Why This Is a Losing Position

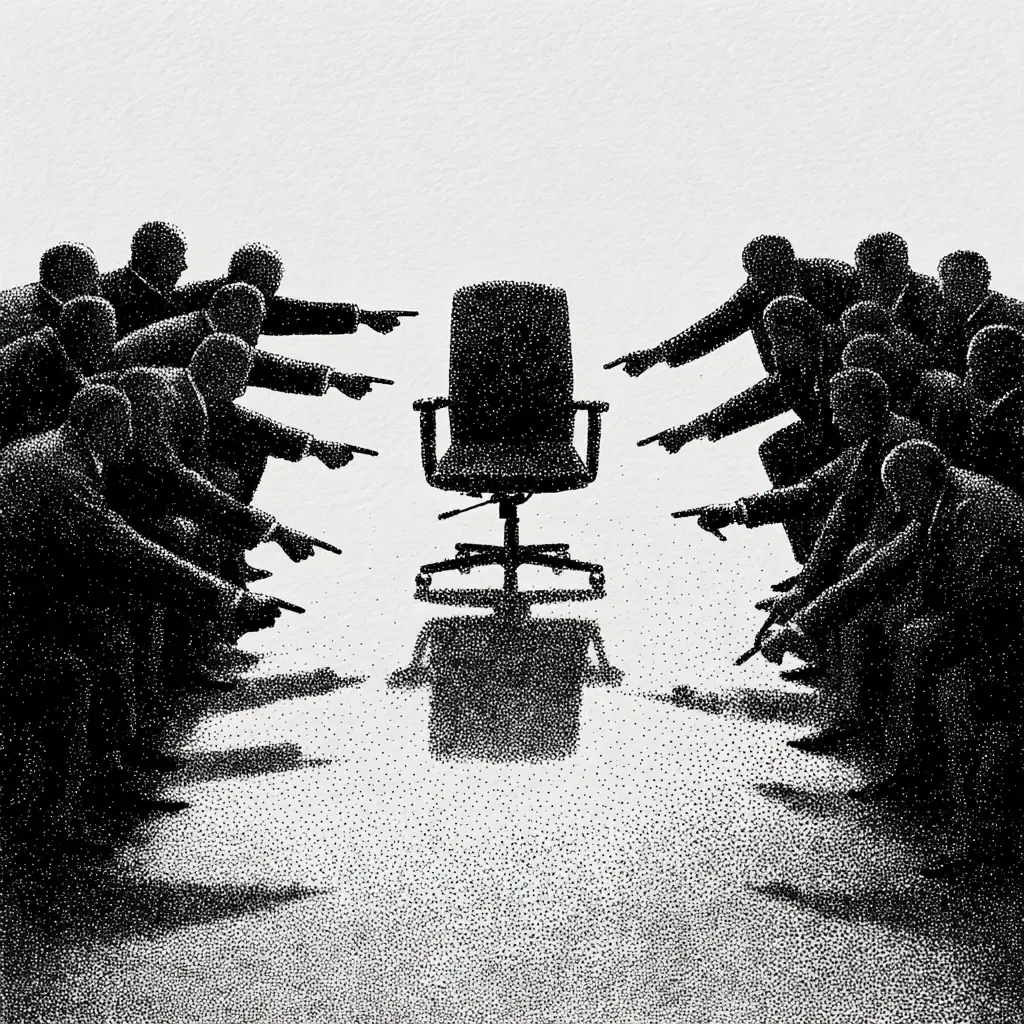

Risk governance without design authority is an impossible mandate.

HR is expected to approve systems it did not help architect. To defend outcomes it cannot fully explain. To manage employee reaction to tools it did not select.

When problems emerge, HR absorbs the blame.

Employees see HR as the face of decisions that feel impersonal. Leaders see HR as the barrier to speed. The function is caught in the middle, accountable without control.

This dynamic is not sustainable.

The Illusion of Neutrality

AI governance often leans heavily on the language of neutrality.

Algorithms are framed as objective. Data-driven decisions are framed as fairer than human judgment. Policies are written to emphasize consistency.

In practice, neutrality is a design choice, not a fact.

Every model reflects priorities. Every metric encodes values. Every threshold creates winners and losers.

When HR is asked to defend AI systems as neutral, it is being asked to obscure these tradeoffs rather than surface them.

That undermines trust.

What HR Actually Needs to Be Effective

For HR to play a constructive role in AI adoption, its mandate must change.

HR needs to be involved earlier, when decisions about scope, use cases, and constraints are still open. It needs authority to influence how AI interacts with work, not just whether it is allowed.

This includes:

- Shaping what decisions should never be fully automated

- Defining where human judgment must remain primary

- Establishing explainability standards employees can understand

- Protecting managers’ ability to intervene meaningfully

Without this influence, HR’s role collapses into damage control.

The Risk of Staying Silent

Some HR teams choose a quieter path.

They approve cautiously. They document concerns. They hope issues can be addressed later.

This silence is understandable, but costly.

AI systems entrench quickly. Once embedded, they are difficult to unwind. What feels like a pilot becomes infrastructure. What feels like experimentation becomes expectation.

By the time harm is visible, HR’s leverage has diminished.

Reclaiming HR’s Strategic Position

HR’s AI moment is not about becoming more technical. It is about becoming more assertive.

Assertive about design responsibility.

Assertive about human impact.

Assertive about where efficiency must yield to dignity and trust.

This requires reframing AI conversations away from “can we” and toward “should we,” “where,” and “under what conditions.”

An HR function that can hold this line strengthens its strategic relevance rather than sacrificing it.

The Practitioner’s Role

Change practitioners and HR leaders share this challenge.

They are often brought in late, asked to legitimize decisions already made. Their value lies in helping organizations slow down just enough to see consequences before they harden.

They translate employee experience into risk insight. They connect system behavior to cultural impact. They help leaders understand that governance is not the same as stewardship.

Final Thought

AI is forcing HR into a choice it did not ask for.

Either accept the role of risk governor without real influence, or reclaim a seat at the design table where human outcomes are shaped.

The future credibility of HR depends on which path it takes.

ChangeGuild: Power to the Practitioner™

Now What?

If HR is being pulled into the role of AI risk governor, these are five concrete ways to reclaim influence before that role hardens.

- Insert HR upstream, not at approval time

Refuse to engage only at the “is this compliant?” checkpoint. Push for participation when AI use cases are being defined, not after tools are selected. If HR is not present when scope, data sources, and decision boundaries are set, governance becomes performative rather than protective.

- Define non-automatable decisions explicitly

Work with leaders to document which people-related decisions should never be fully automated. Promotions, terminations, performance ratings, and disciplinary actions often require human judgment by design. Make this explicit, written, and visible before exceptions become the norm.

- Demand explainability in employee language

If HR cannot explain how an AI-driven decision works in plain language, employees will not trust it. Require vendors and internal teams to produce explanations that a manager can actually use in a conversation, not just technical documentation or legal disclaimers.

- Treat neutrality claims as design discussions

When AI is described as “objective” or “neutral,” slow the conversation down. Ask what outcomes the system optimizes for, which tradeoffs it accepts, and who absorbs the downside. Reframe neutrality as a set of choices, not a shield against accountability.

- Build escalation paths before harm appears

Establish clear processes for managers and employees to challenge AI-driven outcomes early. Waiting until issues surface informally or emotionally erodes trust. A visible, legitimate escalation path signals that human judgment still matters.

Frequently Asked Questions

Is HR really becoming the owner of AI risk?

In practice, yes. When AI affects hiring, performance, scheduling, or workforce decisions, the consequences surface as employee relations issues, compliance concerns, or trust breakdowns. Those outcomes typically land with HR, even if HR did not select or design the system.

Why doesn’t this responsibility sit with IT or Legal instead?

IT focuses on technical performance and integration. Legal focuses on defensibility and regulatory exposure. Neither owns day-to-day employee experience or the cultural impact of decisions. HR becomes the default owner because it manages policies, people fallout, and credibility with the workforce.

Is AI governance the same thing as HR strategy?

No. Governance is about limits, controls, and risk mitigation. Strategy is about shaping outcomes. When HR is involved only in governance, it loses the ability to influence how AI reshapes work, capability, and trust. Effective HR involvement requires both.

What’s the risk of HR staying quiet or cautious?

Silence allows AI systems to harden into infrastructure. What begins as a pilot quickly becomes standard practice. Once embedded, reversing or redesigning AI-driven decisions becomes politically and operationally difficult, reducing HR’s leverage over time.

Does this mean HR needs to become more technical?

Not primarily. HR does not need to build models or write code. It needs authority to influence design decisions, ask better questions about tradeoffs, and translate human impact into business risk leaders understand.

What’s the biggest mistake HR teams make with AI today?

Accepting responsibility for outcomes without demanding influence over design. Risk ownership without design authority puts HR in a no-win position, accountable for decisions it did not shape and expected to defend systems it cannot fully explain.
