TL;DR: AI did not make work worse. It made bad work cheaper, faster, and easier to defend. The result is not low-quality output. It is a flood of plausible, polished content that consumes attention without improving decisions. Welcome to the slop economy.

The Problem Is Not That AI Is Bad

It is that AI removed friction.

Before generative AI, producing bad work took time. You had to write the memo. Build the slide. Draft the strategy doc. Even if the thinking was shallow, effort acted as a natural limiter.

AI removed that limiter.

Now organizations can generate unlimited outputs that look finished, sound reasonable, and feel productive. The cost of producing content has collapsed. The cost of evaluating it has not.

That imbalance is what creates slop.

What “AI Slop” Actually Is

AI slop is not wrong information.

It is not hallucinations.

It is not people being lazy.

AI slop is highly plausible, low-accountability output that absorbs attention without creating clarity.

It has a few consistent traits:

- It sounds confident but avoids commitment

- It summarizes without deciding

- It lists options without tradeoffs

- It feels “done” while leaving all judgment to the reader

Slop survives because it is defensible. You can forward it. Attach it. Paste it into a deck. No one can easily challenge it because it does not assert enough to be wrong.

The Slop Economy at Work

The slop economy emerges when three things happen at once:

1. Volume replaces value

Output becomes the proxy for progress. More documents, more decks, more summaries. Less thinking.

2. Plausibility beats insight

Work is rewarded for sounding right, not for being useful. AI excels here.

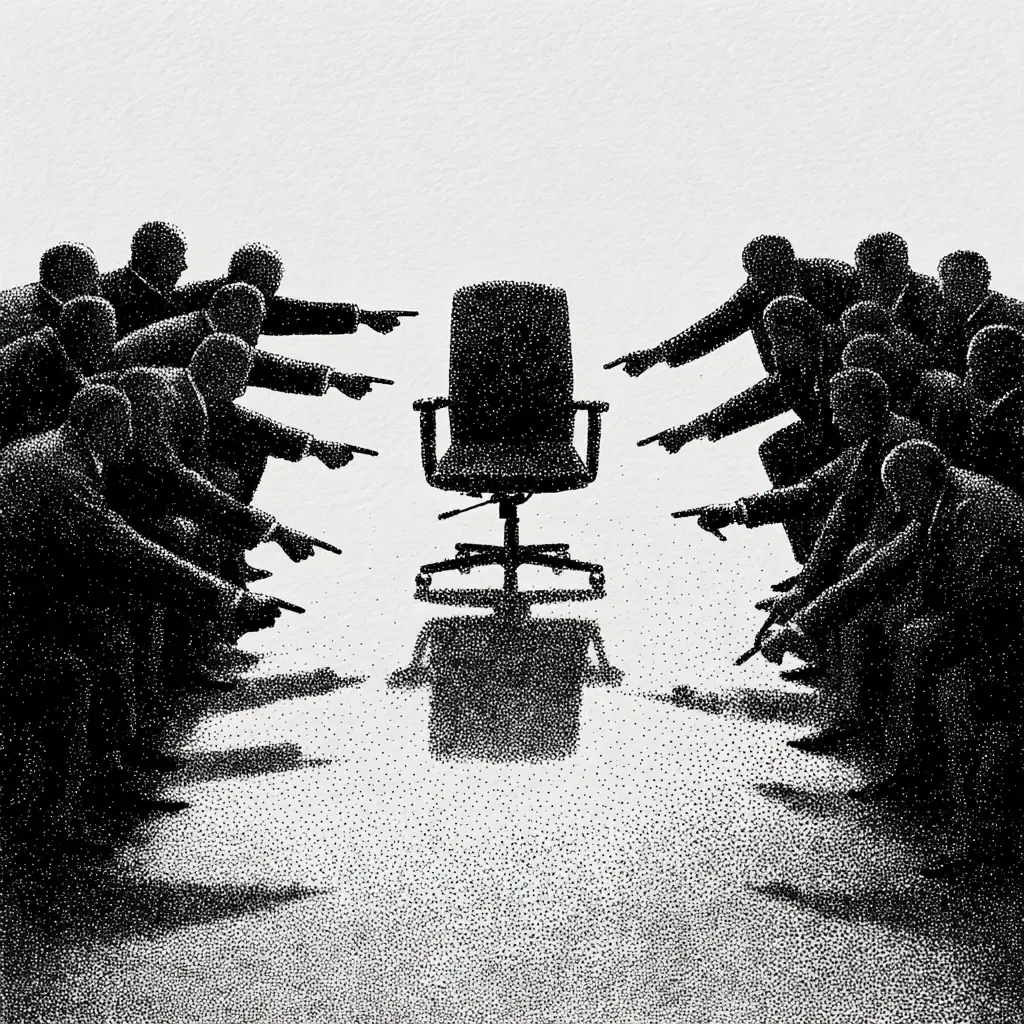

3. Accountability evaporates

“The model produced this” quietly replaces ownership. Decisions become optional.

In this environment, AI is not the cause. It is the accelerant.

Why Slop Is So Hard to Kill

Slop survives because it solves real organizational anxieties.

- Leaders are overloaded and want fast inputs

- Teams are risk-averse and avoid taking positions

- Everyone wants something they can circulate without blame

AI provides exactly that.

The result is an organization full of activity and starving for judgment.

The Hidden Cost of Slop

The real damage is not bad answers. It is signal collapse.

When everything looks reasonable:

- Urgency disappears

- Priorities blur

- Expertise becomes indistinguishable from verbosity

People stop trusting documents. Meetings become interpretation exercises. Decision latency increases.

Ironically, AI meant to save time ends up consuming more of it.

Why “Better Prompting” Will Not Save You

This is not a literacy problem. It is a filter problem.

Organizations invested heavily in tools that generate content and almost nothing in mechanisms that evaluate it.

Without clear standards for:

- What decisions a document must enable

- Who owns the recommendation

- What tradeoffs must be addressed

AI will continue to produce slop, even with perfect prompts.

What Practitioners Are Getting Wrong

Many practitioners think their job is to “clean up” AI output.

That is a trap.

Your value is not polishing language. It is reintroducing friction where it matters.

Good friction asks:

- What decision is this meant to support?

- What happens if we do nothing?

- Who is accountable for the call?

Slop hates friction. Good work requires it.

Push Back

Five ways to push back on the slop economy without becoming “anti-AI”:

- Demand a decision, not a document

Before reviewing anything, ask what decision it is meant to inform.

- Name the tradeoffs explicitly

If a recommendation does not include downsides, it is not finished.

- Assign ownership in the artifact itself

If no one’s name is attached to the call, it is slop.

- Limit circulation until judgment is added

Drafts do not need wide audiences. Decisions do.

- Reward clarity over completeness

Short, opinionated outputs beat exhaustive, neutral ones.

Final Thought

AI did not lower the bar for work. It revealed that many organizations quietly removed it years ago.

The practitioners who matter most in the AI era will not be the fastest producers.

They will be the ones who know how to filter, decide, and take responsibility when the tools make it easy not to.

ChangeGuild: Power to the Practitioner™

Now What?

Five ways to disrupt the slop economy from inside your role

1) Insert a “decision checkpoint” into your workflow

Before any AI-assisted output moves forward, pause and add one explicit line at the top: “This exists to support the following decision…”

If no decision can be named, stop the work. Slop thrives in motion without purpose.

2) Refuse to improve language until thinking is exposed

When reviewing AI-generated content, do not edit tone, clarity, or structure first.

Ask instead:

- What assumption is this making?

- What constraint is missing?

- What risk is being ignored?

Only once the thinking is visible should the writing be refined.

3) Collapse options into a forced recommendation

If an AI output presents multiple paths, require a final section titled: “What I would do and why.”

Even if you disagree with the recommendation, forcing a single one restores accountability and surfaces judgment.

4) Slow down circulation on purpose

Do not forward AI-generated artifacts immediately.

Sit with them. Add context. Add a point of view.

Speed is what allows slop to spread. A short delay often reveals whether the content is actually useful.

5) Model friction publicly

In meetings and reviews, narrate your own process: “This part came from AI. This part reflects my judgment. This is the risk I am choosing to own.”

This makes discernment visible and gives others permission to do the same.

Frequently Asked Questions

What does “AI slop” mean in a workplace context?

AI slop is polished, plausible output that consumes attention but does not improve clarity or decisions. It often avoids commitment, skips tradeoffs, and shifts judgment back to the reader.

Is AI slop the same thing as hallucinations or incorrect answers?

No. Hallucinations are fact problems. Slop is a usefulness problem. Slop can be factually accurate and still waste time because it does not help anyone decide or act.

Why is AI slop showing up everywhere right now?

Because the cost of producing content collapsed while the cost of evaluating it did not. Organizations can now generate far more memos, summaries, decks, and plans than they can thoughtfully review.

How can I tell if an AI-generated deliverable is slop?

If it sounds confident but makes no recommendation, lists options without tradeoffs, avoids constraints, or cannot name the decision it supports, it is trending toward slop.

Will better prompting fix the slop problem?

Better prompting can improve clarity, but it will not solve slop on its own. Slop is driven by incentives and accountability gaps, not just weak inputs.

What is the fastest way to reduce slop on my team?

Require every artifact to state the decision it supports and to include a clear recommendation with tradeoffs and named ownership. Slop survives when no one has to own the call.

How do I push back on slop without sounding anti-AI?

Focus on outcomes, not tools. Ask for decision clarity, tradeoffs, and ownership. Frame it as protecting attention and speeding real decisions, not rejecting AI.
